Continual Neural Mapping: Learning an Implicit Scene Representation from Sequential Observations

Abstract

Recent advances have enabled a single neural network to serve as an implicit scene representation, establishing a mapping function between spatial coordinates and scene properties. In this paper, we take a further step toward continual learning of the implicit scene representation directly from sequential observations, a problem we call Continual Neural Mapping. This setting bridges the gap between batch-trained implicit neural representations and the streaming data common in the robotics and vision communities. We introduce an experience replay approach to tackle an exemplary task of continual neural mapping: approximating a continuous signed distance function (SDF) from sequential depth images as a scene geometry representation. We show for the first time that a single network can represent scene geometry continually over time without catastrophic forgetting, while achieving promising trade-offs between accuracy and efficiency.
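Experience replay keeps a bounded store of past training samples and mixes them into each update on new data, so the network keeps seeing previously observed regions. The sketch below is a minimal illustration under assumptions, not the authors' implementation: the buffer policy (reservoir sampling), the names `ReplayBuffer`, `make_batch`, `process_stream`, and the `replay_ratio` parameter are all hypothetical choices made here.

```python
import random


class ReplayBuffer:
    """Fixed-capacity store of past SDF training samples.

    Reservoir sampling (an assumed policy, not necessarily the paper's)
    keeps every sample seen so far with equal probability capacity / seen,
    so early frames are not crowded out by recent ones.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(sample)
        else:
            j = self.rng.randrange(self.seen)  # uniform index in [0, seen)
            if j < self.capacity:
                self.items[j] = sample  # overwrite a randomly chosen stored sample

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))


def make_batch(current, buffer, batch_size, replay_ratio=0.5):
    """Mix fresh samples from the current frame with replayed past ones.

    replay_ratio is a hypothetical knob: a larger replayed share roughly
    favours stability, a smaller one favours plasticity.
    """
    replayed = buffer.sample(int(batch_size * replay_ratio))
    n_fresh = min(batch_size - len(replayed), len(current))
    fresh = buffer.rng.sample(current, n_fresh)
    return fresh + replayed


def process_stream(frames, buffer, batch_size, train_step):
    """For each frame: train on a mixed batch, then store the frame's samples."""
    for frame in frames:
        train_step(make_batch(frame, buffer, batch_size))
        for s in frame:
            buffer.add(s)
```

Samples are added to the buffer only after the frame has been trained on, so the replayed part of each batch always comes from earlier frames. In a real system `train_step` would run one or more optimiser steps of the SDF network on the batch.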

Problem Setting

Continual neural mapping not only alleviates the catastrophic forgetting caused by the constant distribution shift of sequential observations, but also avoids the cost of expensive batch retraining.

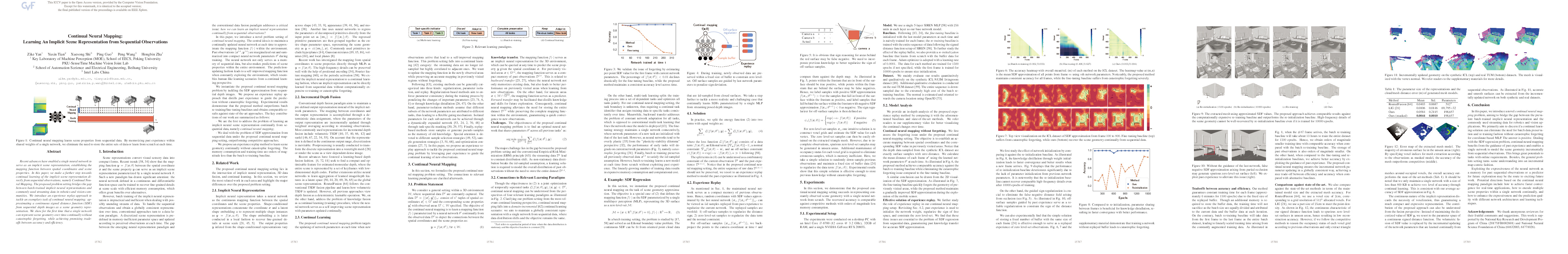

Method

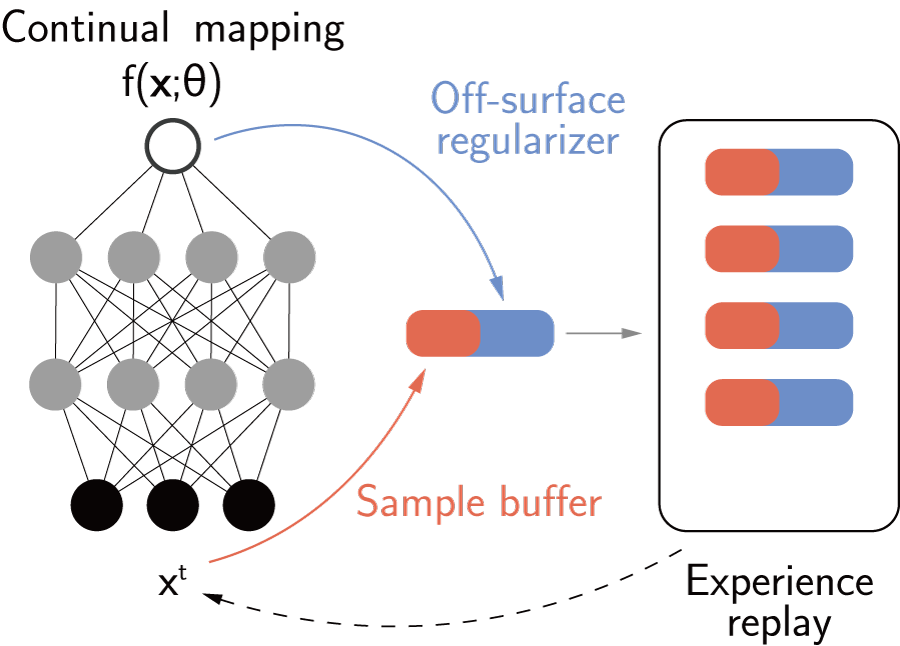

We adopt an experience-replay-based continual learning strategy. Past experiences are replayed to supervise both the zero-crossing surfaces and the non-surface regions. Note that off-surface samples behind the surface (shown in red) may lead to false negatives. We modify the regularization terms of SIREN to handle the non-closed geometry.
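For reference, the sketch below evaluates the original SIREN-style SDF objective that the paragraph above starts from: on-surface samples should have zero SDF and a gradient aligned with the surface normal, every sample should have a unit-norm gradient (eikonal term), and off-surface samples are penalised by exp(-alpha * |f|) when their value is close to zero. It is a minimal illustration on precomputed values, not the paper's modified loss, which this page does not specify. The function names, equal default weights, and `alpha = 100` are assumptions; in practice the gradients would come from autodiff in a deep learning framework.

```python
import math


def _norm(v):
    return math.sqrt(sum(c * c for c in v))


def _cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (_norm(a) * _norm(b))


def siren_sdf_loss(surface, off_surface, alpha=100.0,
                   w_sdf=1.0, w_normal=1.0, w_eik=1.0, w_off=1.0):
    """Original SIREN-style SDF objective (not the paper's modification).

    surface:     list of (pred, grad, normal) for on-surface samples
    off_surface: list of (pred, grad) for samples away from the surface
    pred is the predicted SDF value, grad its spatial gradient, normal the
    observed unit surface normal. Weights and alpha are assumed values.
    """
    # On-surface: the predicted SDF should be zero ...
    l_sdf = sum(abs(f) for f, _, _ in surface) / len(surface)
    # ... and its gradient should point along the surface normal.
    l_normal = sum(1.0 - _cosine(g, n) for _, g, n in surface) / len(surface)
    # Eikonal term on all samples: a true SDF has a unit-norm gradient.
    grads = [g for _, g, _ in surface] + [g for _, g in off_surface]
    l_eik = sum(abs(_norm(g) - 1.0) for g in grads) / len(grads)
    # Off-surface: penalise values near zero to discourage spurious surfaces.
    l_off = sum(math.exp(-alpha * abs(f)) for f, _ in off_surface) / len(off_surface)
    total = w_sdf * l_sdf + w_normal * l_normal + w_eik * l_eik + w_off * l_off
    return {"sdf": l_sdf, "normal": l_normal, "eikonal": l_eik,
            "off": l_off, "total": total}
```

The off-surface term is the one most affected by non-closed geometry: it pushes every off-surface sample away from zero, which is only safe where the space is known not to contain a surface.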

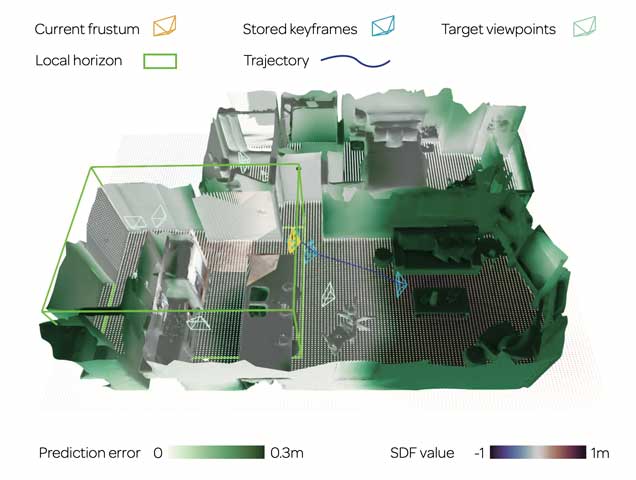

Reconstruction Results

The scene geometry is updated incrementally from sequential observations and encoded compactly by an MLP of less than 800 kB.
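The storage of an MLP follows directly from its layer widths: each fully connected layer holds `w_in * w_out` weights plus `w_out` biases, and each float32 parameter takes 4 bytes. The sketch below does this arithmetic for a hypothetical architecture chosen here for illustration; the actual network size used in the paper is not given on this page.

```python
def mlp_param_count(widths):
    """Weights plus biases of a fully connected MLP with layer widths
    [input, hidden..., output]. Sine or ReLU activations add no parameters."""
    return sum(w_in * w_out + w_out for w_in, w_out in zip(widths, widths[1:]))


def size_kb(n_params, bytes_per_param=4):
    """Storage in kB (1 kB = 1000 bytes), assuming float32 parameters by default."""
    return n_params * bytes_per_param / 1000.0


# Hypothetical architecture: 3D input, four hidden layers of width 256, scalar SDF output.
widths = [3, 256, 256, 256, 256, 1]
n = mlp_param_count(widths)
print(n, round(size_kb(n), 1))  # 198657 794.6
```

Because the hidden-to-hidden layers dominate (about 65.8k parameters each at width 256), the size grows roughly with depth times width squared.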

Open Problems

We highlight two critical problems that remain to be explored under the proposed continual neural mapping setting:

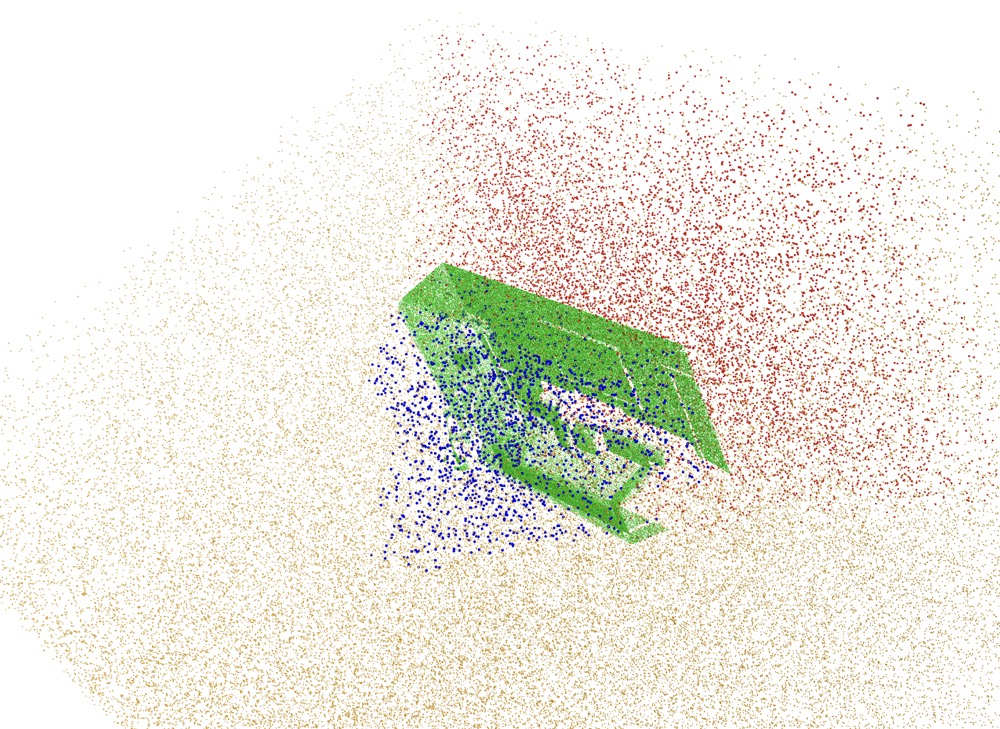

- Stability-plasticity dilemma: The neural network is expected to adapt efficiently to new observations while avoiding forgetting in previously visited areas. The trade-off between adaptation (network plasticity) and retention of past knowledge (network stability) is critical for online mapping systems such as iMAP, NICE-SLAM, and iSDF.

- Uncertainty quantification: The continuous nature of the implicit neural representation produces spurious zero-crossing surfaces in unseen areas, which calls for accurate quantification of the confidence of each prediction.


Citation

@inproceedings{Yan2021iccv,
  title={Continual Neural Mapping: Learning an Implicit Scene Representation from Sequential Observations},
  author={Yan, Zike and Tian, Yuxin and Shi, Xuesong and Guo, Ping and Wang, Peng and Zha, Hongbin},
  booktitle={Intl. Conf. on Computer Vision (ICCV)},
  pages={15782--15792},
  year={2021}
}